2026

A Collaborative Project by Travis Johns & Paulina Velázquez Solís with False Relationships and the Extended Endings Ensemble in collaboration with Swiss composer Teresa Carrasco

Embodied Soundscapes explores the human body as both instrument and score. Through sensor-embedded garments and custom-built interactive systems, this project transforms the performers’ gestures, posture, and physiological states into evolving sonic and visual landscapes. The work invites audiences to witness music as a living organism — one that reacts to breath, motion, and touch — blurring the boundaries between composition, performance, and sculpture. In doing so, we seek to expand the expressive potential of wearable technology and demonstrate how physical presence can become the foundation of a collective, cybernetic approach to contemporary music-making.

In collaboration with Teresa Carrasco and the False Relationships and the Extended Endings Ensemble, we propose the creation and performance of a new interdisciplinary work combining composition, interactivity, sculpture, choreography, and fashion/wearable technology.

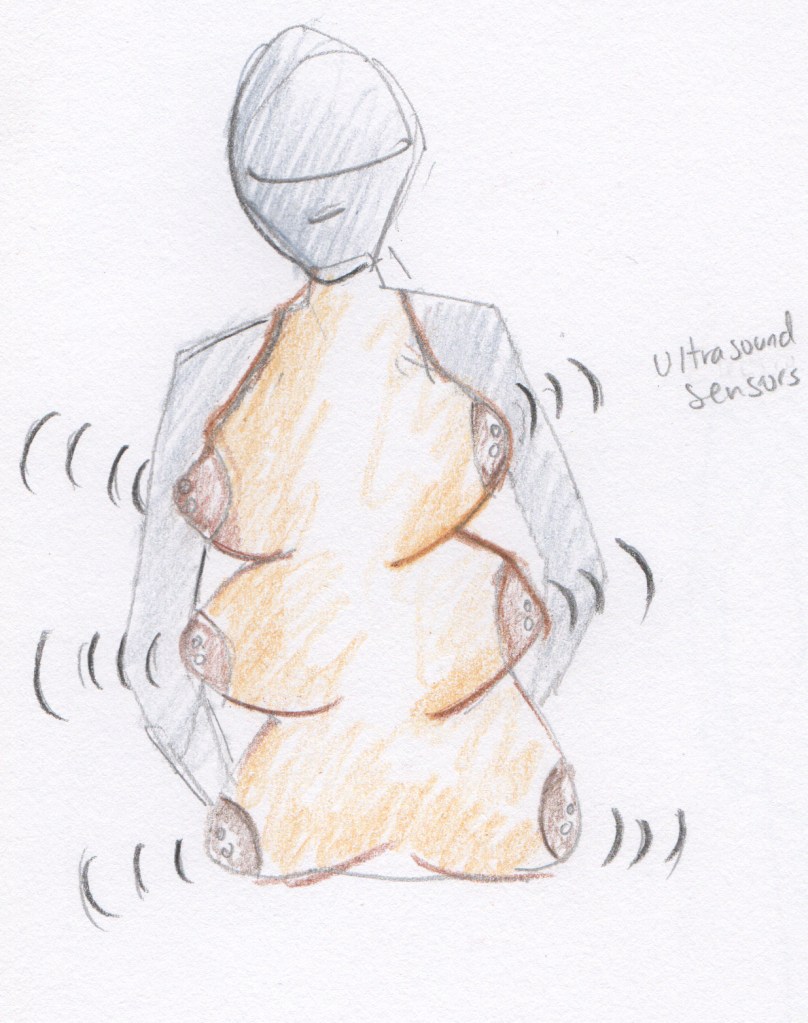

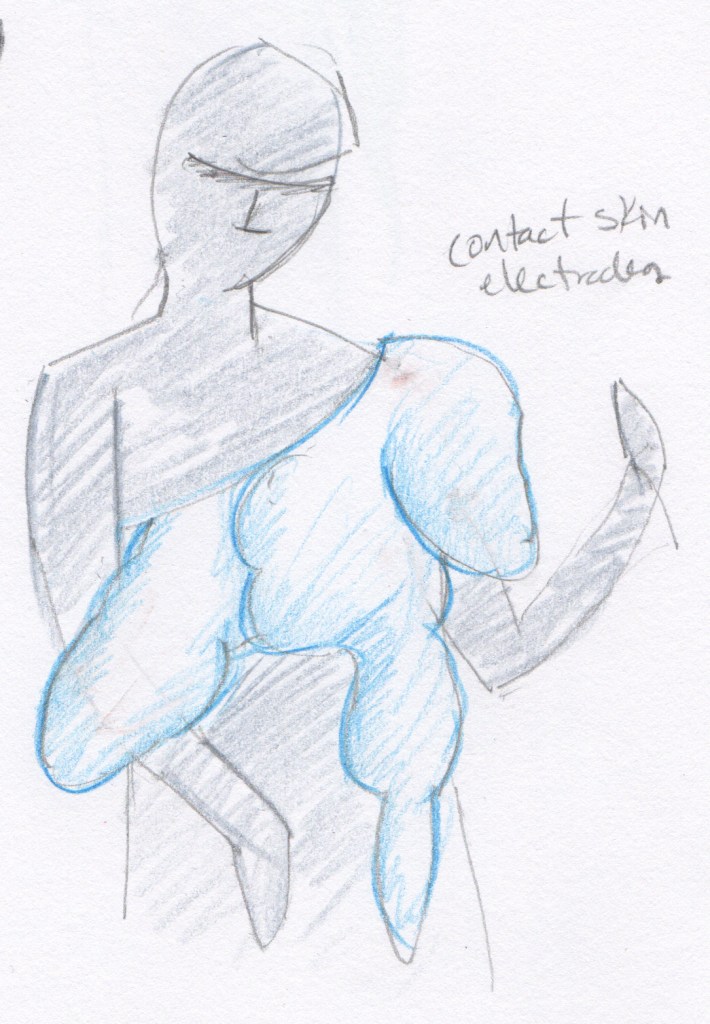

At the core of the project will be sensor-embedded garments designed for up to six ensemble members. Each garment will integrate several types of sensors (flex, ultrasonic, skin-surface voltage, and EEG) connected to battery-powered ESP32 microcontrollers, giving performers complete freedom of movement on stage. Data from these sensors will be transmitted wirelessly to custom-designed hardware and software that will control purpose-built electromechanical sculptures, modified electric guitars, and virtual reality environments.
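The data path described above (garment sensors, ESP32, wireless link, control software) can be illustrated with a minimal Python sketch of the receiving side. It assumes a hypothetical plain-text packet format (`garment_id,sensor_name,raw_value`), raw readings in the ESP32's default 12-bit ADC range, and a hypothetical linear mapping from a flex-sensor reading to a solenoid pulse length. The names `parse_packet` and `solenoid_pulse_ms`, the threshold, and the pulse length are illustrative only; the project's actual transport (for example UDP or OSC), message format, and mappings are not specified here.

```python
from dataclasses import dataclass

# Hypothetical wire format: "garment_id,sensor_name,raw_value", e.g. "3,flex,2710".
# Assumption: raw readings come from the ESP32's 12-bit ADC, i.e. 0..4095.
ADC_MAX = 4095


@dataclass
class Reading:
    garment_id: int
    sensor: str
    value: float  # normalized to 0.0..1.0


def parse_packet(packet: str) -> Reading:
    """Parse one text packet from a garment into a normalized reading."""
    garment_id, sensor, raw = packet.strip().split(",")
    # Clamp so that noisy or out-of-range readings stay within 0..1.
    clamped = min(max(int(raw), 0), ADC_MAX)
    return Reading(int(garment_id), sensor, clamped / ADC_MAX)


def solenoid_pulse_ms(reading: Reading, threshold: float = 0.6, max_pulse_ms: int = 40) -> int:
    """Map a normalized reading to a solenoid pulse length in milliseconds.

    Hypothetical mapping: readings below `threshold` produce no strike (0 ms);
    above it, the pulse grows linearly up to `max_pulse_ms`.
    `threshold` must be below 1.0.
    """
    if reading.value < threshold:
        return 0
    span = (reading.value - threshold) / (1.0 - threshold)
    return round(max_pulse_ms * span)


if __name__ == "__main__":
    # Simulated packets standing in for wireless traffic from three garments.
    for pkt in ["1,flex,1200", "3,flex,4095", "2,flex,3300"]:
        r = parse_packet(pkt)
        print(r.garment_id, r.sensor, round(r.value, 3), solenoid_pulse_ms(r))
```

A threshold-plus-linear mapping is only one possible design. It keeps small, involuntary movements from triggering the sculptures while letting deliberate gestures scale the strength of each strike.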

The intention is to explore the musical potential of wearable technology within a collective, whole-body cybernetic approach to composition, in which the performers’ physicality, gestures, and even subtle mood changes will directly shape the musical and visual outcome of the performance.

Derivative Work

Biometric Studies for Solo Performer (2023)

A performance mapping brainwaves to solenoids (and, in a later version, to samples derived through machine learning), which served as the springboard for this vein of biometric, performance-informed composition.

Cybernetic Action for Guitar (2023)

An experiment conducted with visual artist Mauricio Esquivel to determine how an EEG-to-solenoid interface would respond to an extreme physical or emotional state: in this case, the pain of being tattooed. This experiment forms the basis of a new composition, currently in progress, in which the score is intended to be tattooed onto the performer as they play it.

Biometric/Machine Learning Studies for Three Performers (2024)

A composition using the same code as the second iteration of Biometric Studies for Solo Performer, in triplicate. In performance, datasets are built through live sampling, adding another layer of stimuli for the performers to respond to. Individual actions are determined by open-ended prompts on index cards, which guide the dynamics of interaction among the ensemble members, and by audio reconstructed through EEG-guided machine learning from material sampled during the performance itself. The piece was premiered by Tacet(i) on December 22, 2024, at the Bangkok Art and Culture Center in Bangkok, Thailand, in a version for guitar, trombone, and percussion.